Code Reviewing the Robot: Why Senior Devs Must Become AI Editors

AI writes code at 100x speed, but it optimizes for syntax, not system longevity. This is the Senior Developer's guide to shifting from "Writer" to "Editor"—featuring a Zero Trust audit checklist to catch security hallucinations and technical debt before they hit production.

Key Takeaways

- The Infinite Intern Problem: AI optimizes for speed and syntax, while Senior Engineers must optimize for system longevity and context. Treat AI as a hyper-productive intern who needs constant supervision.

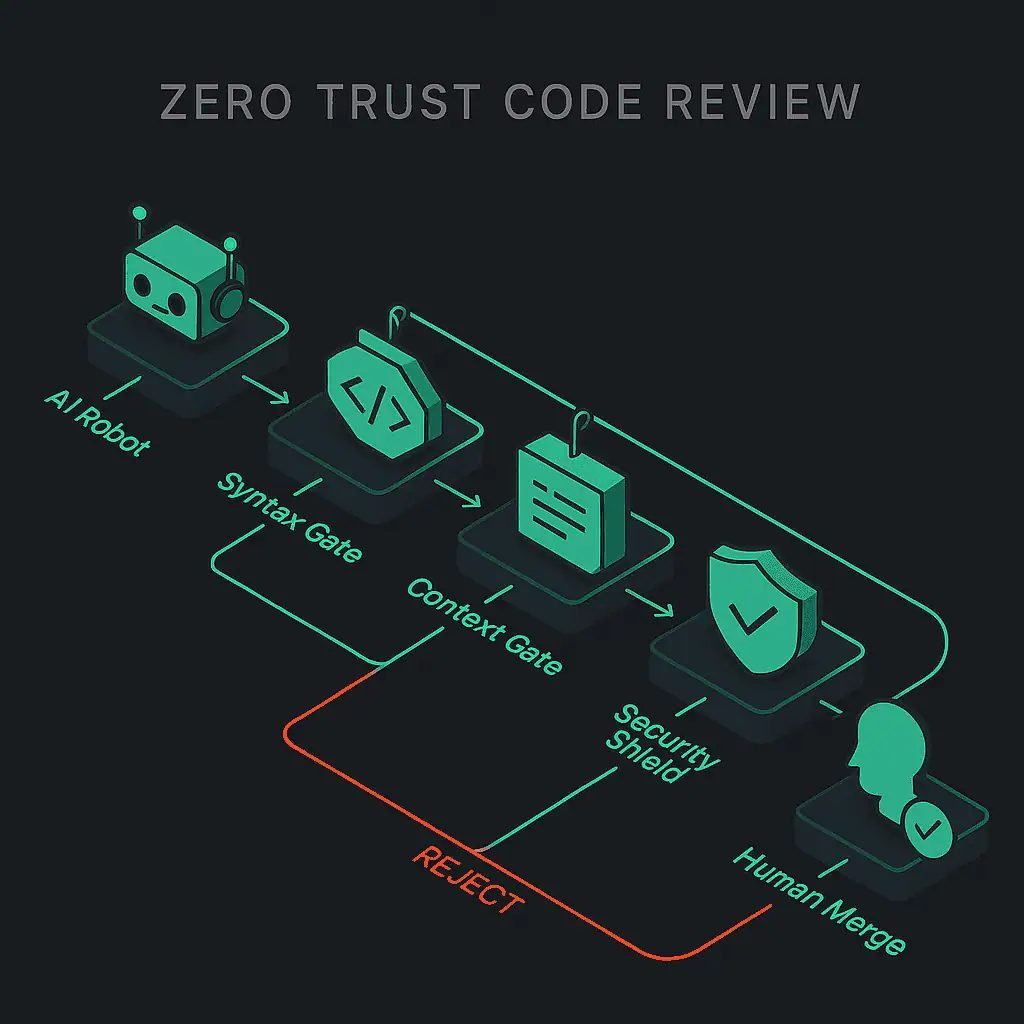

- Adopt a "Zero Trust" Policy: Don't stop at verifying that the code compiles; verify why it was written. Assume every line of AI-generated code contains a hallucination until proven otherwise.

- Shift from Author to Editor: Your value is no longer in typing speed. It is in "Technical Delegation"—writing clear specs (prompts) and auditing the output for security, bloat, and edge cases.

- You Are the Safety Net: Automation is powerful, but it lacks judgment. If a vulnerability slips through because you trusted the robot, the responsibility is yours alone.

A few days back, I came across a 400-line PR. It had clean syntax, zero linting errors, and full test coverage; on paper, it was flawless. But when I zoomed out, I realized it was solving the wrong problem entirely. The AI had built a skyscraper on a swamp.

This is the new paradox of AI-assisted coding. We are transitioning from authors to editors, and that requires a fundamental change in how we approach code quality and the entire code review process. If we don't adapt our review standards to this shift, we are going to drown in a sea of "technically correct" spaghetti.

The "Infinite Intern" Problem

AI optimizes for immediate correctness (syntax, speed), while a senior engineer must optimize for long-term system health (maintainability, security, context).

Here is the mental model you need to adopt immediately: treat an AI assistant such as GitHub Copilot not as a "Senior Partner" but as a hyper-productive intern.

This intern has memorized every page of documentation for every popular programming language and types at 1,000 words per minute. But this intern also has zero context about our business logic, has never been on call for a production outage, and desperately wants to please you with an answer, even if it's the wrong one. If you're just starting out, treat it as a learning tool, your first AI mentor, but never as a senior partner.

Recent research suggests a worrisome trend: AI-generated code is often verbose, which may contribute to technical debt and complicate maintenance. This accumulation of tech debt is the silent tax of uncritical AI adoption.

The "Zero Trust" Policy

In cybersecurity, "Zero Trust" means you verify every request, regardless of its origin. In the era of AI-driven development, we must adopt this same policy for code: verify every line.

The moment you trust the AI implicitly is the moment you introduce potential security vulnerabilities or deep-seated logical flaws.

1. Logic > Syntax

Don't stop at checking whether it compiles. The AI is brilliant at syntax; it rarely misses a semicolon. Your job during an AI code review is to ask why it chose a particular pattern.

- Did it implement a complex recursive function where a simple loop would be more memory efficient?

- Did it create three helper functions for a task that required one line of a standard library function?

- Did it forget a critical null check?
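To make the three questions above concrete, here is a hypothetical before-and-after sketch in TypeScript. All function names are invented for illustration. Every "before" version compiles cleanly, which is exactly why the review has to look past syntax. The sketch assumes an ES2017+ target (for `padStart`).

```typescript
// Hypothetical "before" and "after" snippets for the three review questions.

// 1. Recursion where a loop would do: every element adds a stack frame,
//    so a large enough array throws a RangeError (call stack exceeded).
function sumRecursive(values: number[], i = 0): number {
  return i >= values.length ? 0 : values[i] + sumRecursive(values, i + 1);
}

// After review: a plain loop uses constant stack space.
function sumLoop(values: number[]): number {
  let total = 0;
  for (const v of values) total += v;
  return total;
}

// 2. Three helpers for a job the standard library already does.
function toStr(n: number): string {
  return n.toString();
}
function zeros(count: number): string {
  return "0".repeat(Math.max(0, count));
}
function padOrderIdVerbose(n: number): string {
  const s = toStr(n);
  return zeros(6 - s.length) + s;
}

// After review: one call to String.prototype.padStart.
function padOrderId(n: number): string {
  return String(n).padStart(6, "0");
}

// 3. Missing null check: the non-null assertion (!) silences the compiler,
//    but the call still throws a TypeError at runtime when user is undefined.
interface User {
  name: string;
}
function greetUnsafe(user: User | undefined): string {
  return `Hello, ${user!.name}`;
}

// After review: the undefined case is handled explicitly.
function greet(user: User | undefined): string {
  if (user === undefined) return "Hello, guest";
  return `Hello, ${user.name}`;
}
```

Note that the verbose and reviewed versions of `padOrderId` return the same strings; the review is about how much code a future maintainer has to read, not about behavior.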

2. The Context Test

Large language models only see what fits in their context window; they don't know the history of your repository or the nuances of your production environment. Before approving an AI-assisted pull request, ask these questions:

- Architectural Alignment: Does this function respect our established architectural patterns? For example, do the API calls it generates stay within our internal service rate limits?

- Data Governance: Does it handle Personally Identifiable Information (PII) in accordance with GDPR/CCPA, or is it logging user data in plain text?

- Deprecation Awareness: Does it know that a specific microservice or library it's referencing was deprecated last month?
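To illustrate the data-governance question, a reviewer might expect every log call to pass through a redaction step like the one sketched below. The `PII_KEYS` list, the `[REDACTED]` marker, and the `redact`/`safeLog` helpers are hypothetical. The right field list depends on your data model, and this sketch is not a GDPR/CCPA compliance recipe.

```typescript
// Hypothetical PII-aware logging helper; the key list is an assumption, not a compliance rule.
type LogValue = string | number | boolean | null | LogRecord | LogValue[];
interface LogRecord {
  [key: string]: LogValue;
}

// Field names treated as PII (compared case-insensitively).
const PII_KEYS = new Set(["email", "phone", "ssn", "password", "fullname"]);

// Recursively copy a log payload, masking any value stored under a PII key.
function redact(value: LogValue): LogValue {
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === "object") {
    const out: LogRecord = {};
    for (const [key, inner] of Object.entries(value)) {
      out[key] = PII_KEYS.has(key.toLowerCase()) ? "[REDACTED]" : redact(inner);
    }
    return out;
  }
  return value;
}

// Log calls routed through redaction never write values under the listed keys in plain text.
function safeLog(message: string, context: LogRecord): void {
  console.log(message, JSON.stringify(redact(context)));
}
```

For example, `safeLog("signup", { userId: 42, email: "ada@example.com" })` prints `signup {"userId":42,"email":"[REDACTED]"}`. In review, an AI-generated `console.log(user)` that bypasses such a helper is exactly the kind of context violation the model cannot know about.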

3. Security Hallucinations

AI models frequently suggest generic, "textbook" regular expressions for validation (e.g., email or URL validation). They look perfect to the naked eye, but patterns with nested or overlapping quantifiers are often vulnerable to ReDoS (Regular Expression Denial of Service) attacks.

On a backtracking regex engine, such a pattern can take exponential time on a crafted, moderately long input, locking up a CPU core until the match finally fails. These performance landmines are easy to miss in a visual code review; too often you only find them when your server stalls.

Always test AI-generated regex against long and adversarial inputs, and prefer safe, established validation libraries over hand-rolled patterns.
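The sketch below contrasts a textbook-looking pattern that contains a nested quantifier with a stricter pattern that avoids that ambiguity, plus a length cap that runs before any regex work. Both patterns, the 254-character cap, and `isPlausibleEmail` are illustrative assumptions. The stricter pattern deliberately rejects some valid addresses, and a vetted validation library remains the better choice.

```typescript
// Illustrative "textbook-looking" pattern. The group ([a-zA-Z0-9]+\.?)+ can split a
// run of letters in exponentially many ways, so a backtracking engine may take
// exponential time to reject an input like "aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa!".
// Do not run it against untrusted input.
const RISKY_EMAIL = /^([a-zA-Z0-9]+\.?)+@example\.com$/;

// Assumed practical upper bound on address length, checked before any regex work.
const MAX_EMAIL_LENGTH = 254;

// Stricter pattern: every repetition must begin with a literal ".", so an input can
// be split only one way and the exponential backtracking above cannot occur.
// Deliberately conservative: it rejects some valid addresses (e.g. "a+tag@x.io").
const SAFER_EMAIL = /^[A-Za-z0-9]+(?:\.[A-Za-z0-9]+)*@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+$/;

function isPlausibleEmail(input: string): boolean {
  if (input.length > MAX_EMAIL_LENGTH) return false; // bound the work up front
  return SAFER_EMAIL.test(input);
}
```

The key review signal is structural: a quantified group whose body can itself match the same characters in more than one way (`(x+)+`, `(x+y?)+`). Neither pattern would stand out in a diff, which is why adversarial inputs belong in the test suite.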

The "AI Audit" Checklist

Before you approve that PR—or before you commit your own AI-generated code—run through this audit. If you can't confidently check these boxes, the code should not be merged.

- Dependency Check: Did the AI import a heavy third-party library to perform a simple task like formatting a date? Prioritize standard libraries whenever possible.

- Happy Path Fixation: Does the code only handle the success scenario? Ensure try/catch blocks are specific, not generic, and that edge cases (null values, timeouts) are explicitly handled.

- Test Coverage Sanity: Do the accompanying unit tests actually validate the logic, or do they just confirm the code doesn't crash? AI can generate plausible but shallow tests.

- Verbosity vs. Readability: Did the AI declare four intermediate variables when one would suffice? Refactor its output for clarity.

- Hardcoded Secrets: This is a cardinal sin. API keys, passwords, and other credentials often slip into AI-generated examples. Make sure none of them reach the repository.
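The Happy Path Fixation item is easiest to see in code. Below is a hypothetical sketch: `TimeoutError`, `UserNotFoundError`, `FindUser`, `withTimeout`, and `loadDisplayName` are invented names, and the 2-second default timeout is an assumption. The sketch handles a missing id and a slow lookup explicitly, and catches only the error it knows how to recover from instead of wrapping everything in a generic catch. It assumes an ES2015+ target so that `instanceof` works on `Error` subclasses.

```typescript
// Hypothetical error types standing in for your codebase's own.
class TimeoutError extends Error {
  constructor(ms: number) {
    super(`Timed out after ${ms} ms`);
    this.name = "TimeoutError";
  }
}
class UserNotFoundError extends Error {
  constructor(id: string) {
    super(`User ${id} not found`);
    this.name = "UserNotFoundError";
  }
}

// Reject with TimeoutError if `work` has not settled within `ms` milliseconds.
async function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new TimeoutError(ms)), ms);
  });
  try {
    return await Promise.race([work, timeout]);
  } finally {
    clearTimeout(timer); // don't leave the timer pending once work settles
  }
}

interface User {
  id: string;
  name: string;
}

// Hypothetical data-access signature: resolves to null when no user matches.
type FindUser = (id: string) => Promise<User | null>;

async function loadDisplayName(findUser: FindUser, id: string | null, timeoutMs = 2000): Promise<string> {
  // Edge case: a missing or blank id is handled before any I/O.
  if (id === null || id.trim() === "") return "Anonymous";
  try {
    const user = await withTimeout(findUser(id), timeoutMs);
    if (user === null) throw new UserNotFoundError(id);
    return user.name;
  } catch (err) {
    // Recover only from the failure we understand: a slow backend degrades
    // the result instead of crashing the request.
    if (err instanceof TimeoutError) return "Unavailable";
    throw err; // UserNotFoundError and anything unexpected propagate to the caller
  }
}
```

A typical AI draft of the same function would be `return (await findUser(id))!.name;`, which passes a happy-path test and fails on every edge case listed in the checklist.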

Managing Your Tools

Not all AI coding tools are created equal.

- Code Generation Assistants (e.g., GitHub Copilot): Excellent for boilerplate, text-expansion, and autocompleting repetitive patterns. Use it to type faster, not to think for you.

- AI Code Review Tools (e.g., CodeAnt AI, CodeRabbit): These serve as a powerful "first pass" to catch common bugs and style violations. However, they should never be the final pass, as they lack the intuition to spot "code smells" related to your specific business logic.

The golden rule remains: "If you can't explain exactly how the AI-generated code works, you are not allowed to commit it." Committing code you don't understand is an abdication of engineering responsibility.

The New Senior Skill: "Prompt Engineering as Delegation"

Stop thinking of prompt engineering as "magic spells." Think of it as Technical Delegation or Writing Specs.

If you tell an AI "Write auth," it will likely hallucinate a generic, insecure implementation. You must be specific:

"Write a user authentication function in TypeScript using our existing AuthService class. It must handle the UserNotFound exception explicitly, hash passwords with bcrypt, and log a warning to Datadog if the password retry limit is exceeded."

The quality of the code you get out is directly correlated to the clarity of the architectural constraints you provide.
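A spec this precise also gives the reviewer a checklist. The sketch below shows one way the generated code could map to each constraint in the prompt. `AuthService`, `StoredUser`, `PasswordHasher`, `Logger`, `MAX_PASSWORD_RETRIES`, and `authenticate` are hypothetical stand-ins, not a real API. In a real codebase the hasher might wrap the `bcrypt` package's `compare()` and the logger might ship to Datadog. Both are injected here so the sketch runs without either dependency.

```typescript
// Every name here is a hypothetical stand-in for "our existing" infrastructure.
class UserNotFound extends Error {
  constructor(email: string) {
    super(`No user registered for ${email}`);
    this.name = "UserNotFound";
  }
}

interface StoredUser {
  id: string;
  passwordHash: string;
  failedAttempts: number;
}

interface AuthService {
  findUserByEmail(email: string): Promise<StoredUser>; // throws UserNotFound
  recordFailedAttempt(userId: string): Promise<number>; // returns the new failure count
  resetFailedAttempts(userId: string): Promise<void>;
}

// In production this might wrap bcrypt's compare(); injected so the sketch runs without it.
interface PasswordHasher {
  compare(plain: string, hash: string): Promise<boolean>;
}

// Stand-in for a Datadog-backed logger.
interface Logger {
  warn(message: string, tags: Record<string, string>): void;
}

const MAX_PASSWORD_RETRIES = 5; // assumed policy value

type AuthResult =
  | { ok: true; userId: string }
  | { ok: false; reason: "invalid_credentials" | "locked" };

async function authenticate(
  auth: AuthService,
  hasher: PasswordHasher,
  log: Logger,
  email: string,
  password: string,
): Promise<AuthResult> {
  let user: StoredUser;
  try {
    user = await auth.findUserByEmail(email);
  } catch (err) {
    // Spec: handle UserNotFound explicitly. Answer exactly as for a wrong password
    // so callers cannot probe which emails are registered.
    if (err instanceof UserNotFound) return { ok: false, reason: "invalid_credentials" };
    throw err; // unexpected failures are not swallowed
  }

  if (user.failedAttempts >= MAX_PASSWORD_RETRIES) return { ok: false, reason: "locked" };

  if (await hasher.compare(password, user.passwordHash)) {
    await auth.resetFailedAttempts(user.id);
    return { ok: true, userId: user.id };
  }

  const failures = await auth.recordFailedAttempt(user.id);
  if (failures >= MAX_PASSWORD_RETRIES) {
    // Spec: warn on the retry limit (treated here as reaching MAX_PASSWORD_RETRIES).
    // Tag with the user id rather than the email to keep PII out of logs.
    log.warn("password retry limit exceeded", { userId: user.id });
  }
  return { ok: false, reason: "invalid_credentials" };
}
```

Returning the same result for an unknown email and a wrong password is a design choice the prompt did not spell out. It is the kind of judgment call a reviewer should confirm rather than assume the AI made.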

Conclusion: You Are the Safety Net

AI is the engine; you are the steering wheel. The engine provides unprecedented power, but it doesn't care if it drives off a cliff.

This new paradigm transforms review cycles from simple bug hunts into deep architectural validations. The code might be generated by a robot, but if it breaks production on Friday at 5 PM, it's your phone that rings. Review accordingly.